Paul S. Randal At a Glance:

- Managing data and transaction log files

- Eliminating index fragmentation

- Ensuring accurate, up-to-date statistics

- Detecting corrupted database pages

- Establishing an effective backup strategy

Contents

Several times a week I'm asked for advice on how to effectively maintain a production database. Sometimes the questions come from DBAs who are implementing new solutions and want help

fine-tuning maintenance practices to fit their new databases' characteristics. More frequently, however, the questions come from people who are not professional DBAs but for one reason or another have been given ownership of and responsibility for a database. I like to call this role the "involuntary DBA." The focus of this article is to provide a primer of database maintenance best-practices for all the involuntary DBAs out there.

As with the majority of tasks and procedures in the IT world, there isn't an easy one-size-fits-all solution for effective database maintenance, but there are some key areas that nearly always need to be addressed. My top five areas of concern are (in no particular order of importance):

- Data and log file management

- Index fragmentation

- Statistics

- Corruption detection

- Backups

An unmaintained (or poorly maintained) database can develop problems in one or more of these areas, which can eventually lead to poor application performance or even downtime and data loss.

In this article, I'll explain why these issues matter and show you some simple ways to mitigate the problems. I will base my explanations on SQL Server® 2005, but I'll also highlight the major differences that you'll find in SQL Server 2000 and the upcoming SQL Server 2008.

Data and Log File Management

The first area I always recommend checking when taking over a database concerns the settings related to data and (transaction) log file management. Specifically, you should make sure that:

- The data and log files are separated from each other and isolated from everything else as well

- Auto-growth is configured correctly

- Instant file initialization is configured

- Auto-shrink is not enabled and shrink is not part of any maintenance plan

When data and log files (which ideally should be on separate volumes altogether) share a volume with any other application that creates or expands files, there is the potential for file fragmentation. In data files, excessive file fragmentation can be a small contributing factor in poorly performing queries (specifically those that scan very large amounts of data). In log files, it can have a much more significant impact on performance, especially if auto-growth is set to increase each file size only by a very small amount each time it is needed.

Log files are internally divided into sections called Virtual Log Files (VLFs) and the more fragmentation there is in the log file (I use the singular here because there is no gain from having multiple log files—there should only be one per database), the more VLFs there are. Once a log file has more than, say, 200 VLFs, performance can be negatively impacted for log-related operations such as log reads (for transactional replication/rollback, for example), log backups, and even triggers in SQL Server 2000 (the implementation of triggers changed in SQL Server 2005 to the row versioning framework instead of the transaction log).

The best practice regarding the sizing of data and log files is to create them with an appropriate initial size. For data files, the initial size should take into account the potential for additional data being added to the database in the short-term. For instance, if the initial size of the data is 50GB, but you know that over the next six months an additional 50GB of data will be added, it makes sense to create the data file to be 100GB right away, rather than having to grow it several times to reach that size.

It's a little more complicated for log files, unfortunately, and you need to consider factors like transaction size (long-running transactions cannot be cleared from the log until they complete) and log backup frequency (since this is what removes the inactive portion of the log). For more information, see "

8 Steps to Better Transaction Log Throughput", a popular blog post on SQLskills.com written by my wife, Kimberly Tripp.

Once set up, the file sizes should be monitored at various times and proactively grown manually at an appropriate time of day. Auto-grow should be left on as a just-in-case protection so the files can still grow if they need to if some abnormal event occurs. The logic against leaving file management entirely to auto-grow is that auto-grow of small amounts leads to file fragmentation, and that auto-grow can be a time-consuming process that stalls the application workload at unpredictable times.

The auto-grow size should be set to a specific value, rather than a percentage, to bound the time and space needed to perform the auto-grow, if it occurs. For instance, you may want to set a 100GB data file to have a fixed 5GB auto-grow size, rather than, say 10 percent. This means it will always grow by 5GB, no matter how large the file ends up being, rather than an ever-increasing amount (10GB, 11GB, 12GB, and so on) each time the file gets bigger.

When a transaction log is grown (either manually or through auto-grow), it is always zero-initialized. Data files have the same default behavior in SQL Server 2000, but starting with SQL Server 2005, you can enable instant file initialization, which skips zero-initializing the files and hence makes growth and auto-growth virtually instantaneous. Contrary to popular belief, this feature is available in all editions of SQL Server. For more information, enter "instant file initialization" in the index of Books Online for SQL Server 2005 or SQL Server 2008.

Finally, care should be taken that shrink is not enabled in any way. Shrink can be used to reduce the size of a data or log file, but it is a very intrusive, resource-heavy process that causes massive amounts of logical scan fragmentation in data files (see below for details) and leads to poor performance. I changed the SQL Server 2005 Books Online entry for shrink to include a warning to this effect. Manual shrinking of individual data and log files, however, can be acceptable under special circumstances.

Auto-shrink is the worst offender as it starts every 30 minutes in the background and tries to shrink databases where the auto-shrink database option is set to true. It is a somewhat unpredictable process in that it only shrinks databases with more than 25 percent free space. Auto-shrink uses lots of resources and causes performance-dropping fragmentation and so is not a good plan under any circumstances. You should always switch off auto-shrink with:

|

ALTER DATABASE MyDatabase SET AUTO_SHRINK OFF;

|

A regular maintenance plan that includes a manual database shrink command is almost as bad. If you find that your database continually grows after the maintenance plan shrinks it, that's because the database needs that space in which to run.

The best thing to do is allow the database to grow to a steady-state size and avoid running shrink altogether. You can find more information on the downsides of using shrink, plus some commentary on the new algorithms in SQL Server 2005 on my old MSDN

® blog at

blogs.msdn.com/sqlserverstorageengine/archive/tags/Shrink/default.aspx.

Index Fragmentation

Apart from fragmentation at the file-system level and within the log file, it's also possible to have fragmentation within the data files, in the structures that store the table and index data. There are two basic types of fragmentation that can occur within a data file:

- Fragmentation within individual data and index pages (sometimes called internal fragmentation)

- Fragmentation within index or table structures consisting of pages (called logical scan fragmentation and extent scan fragmentation)

Internal fragmentation is where there is a lot of empty space in a page. As

Figure 1 shows, each page in a database is 8KB in size and has a 96-byte page header; as a result, a page can store roughly 8096 bytes of table or index data (specific table and index internals for data and row structures can be found on my blog at

sqlskills.com/blogs/paul in the Inside The Storage Engine category). Empty space can occur if each table or index record is more than half the size of a page, as then only a single record can be stored per-page. This can be very hard or impossible to correct, as it would require a table or index schema change, for instance by changing an index key to be something that doesn't cause random insertion points like a GUID does.

Figure 1 The structure of a database page (Click the image for a larger view)

More commonly, internal fragmentation results from data modifications, such as inserts, updates, and deletes, which can leave empty space on a page. Mismanaged fill-factor can also contribute to fragmentation; see Books Online for more details. Depending on the table/index schema and the application's characteristics, this empty space may never be reused once it is created and can lead to ever-increasing amounts of unusable space in the database.

Consider, for instance, a 100-million-row table with an average record size of 400 bytes. Over time, the application's data modification pattern leaves each page with an average of 2800 bytes of free space. The total space required by the table is about 59GB, calculated as 8096-2800 / 400 = 13 records per 8KB page, then dividing 100 million by 13 to get the number of pages. If the space wasn't being wasted, then 20 records would fit per page, bringing the total space required down to 38GB. That's a huge savings!

Wasted space on data/index pages can therefore lead to needing more pages to hold the same amount of data. Not only does this take up more disk space, it also means that a query needs to issue more I/Os to read the same amount of data. And all these extra pages occupy more space in the data cache, thus taking up more server memory.

Logical scan fragmentation is caused by an operation called a page split. This occurs when a record has to be inserted on a specific index page (according to the index key definition) but there is not enough space on the page to fit the data being inserted. The page is split in half and roughly 50 percent of the records moved to a newly allocated page. This new page is usually not physically contiguous with the old page and therefore is called fragmented. Extent scan fragmentation is similar in concept. Fragmentation within the table/index structures affects the ability of SQL Server to do efficient scans, whether over an entire table/index or bounded by a query WHERE clause (such as SELECT * FROM MyTable WHERE Column1 > 100 AND Column1 < 4000).

Figure 2 shows newly created index pages with 100 percent fill-factor and no fragmentation—the pages are full and the physical order of the pages matches the logical order. Figure 3 shows the fragmentation that can occur after random inserts/updates/deletes.

Figure 2 Newly created index pages with no fragmentation; pages 100% full (Click the image for a larger view)

Figure 3 Index pages showing internal and logical scan fragmentation after random inserts, updates, and deletes (Click the image for a larger view)

Fragmentation can sometimes be prevented by changing the table/index schema, but as I mentioned above, this may be very difficult or impossible. If prevention is not an option, there are ways to remove fragmentation once it has occurred—in particular, by rebuilding or reorganizing an index.

Rebuilding an index involves creating a new copy of the index—nicely compacted and as contiguous as possible—and then dropping the old, fragmented one. As SQL Server creates a new copy of the index before removing the old one, it requires free space in the data files approximately equivalent to the size of the index. In SQL Server 2000, rebuilding an index was always an offline operation. In SQL Server 2005 Enterprise Edition, however, index rebuilding can take place online, with a few restrictions. Reorganizing, on the other hand, uses an in-place algorithm to compact and defragment the index; it requires only 8KB of additional space to run—and it always runs online. In fact, in SQL Server 2000, I specifically wrote the index reorganize code as an online, space-efficient alternative to rebuilding an index.

In SQL Server 2005, the commands to investigate are ALTER INDEX … REBUILD to rebuild indexes, and ALTER INDEX … REORGANIZE to reorganize them. This syntax replaces the SQL Server 2000 commands DBCC DBREINDEX and DBCC INDEXDEFRAG, respectively.

There are many trade-offs between these methods, such as the amount of transaction logging generated, the amount of free space in the database required, and whether the process is interruptible without loss of work. You'll find a white paper that discusses these trade-offs, and more, at

microsoft.com/technet/prodtechnol/sql/2000/maintain/ss2kidbp.mspx. The paper is based on SQL Server 2000 but the concepts translate well to later versions.

Some people simply choose to rebuild or reorganize all indexes every night or every week (using a maintenance plan option, for instance) rather than figuring out which indexes are fragmented and whether any benefit will come from removing the fragmentation. While that can be a good solution for an involuntary DBA who just wishes to put something in place with minimal effort, note that it can be a very poor choice for larger databases or systems where resources are at a premium.

A more sophisticated approach involves using the DMV sys.dm_db_index_physical_stats (or DBCC SHOWCONTIG in SQL Server 2000) to periodically determine which indexes are fragmented, and then choosing whether and how to operate on those. The whitepaper also discusses using these more targeted choices. Additionally, you can see some example code to do this filtering in Example D of the Books Online entry for the DMV sys.dm_db_index_physical_stats in SQL Server 2005 (

msdn.microsoft.com/library/ms188917) or Example E in the Books Online entry for DBCC SHOWCONTIG in SQL Server 2000 and later (at

msdn.microsoft.com/library/aa258803).

Whichever method you use, it is highly advisable to investigate and fix fragmentation on a regular basis.

Statistics

The Query Processor is the part of SQL Server that decides how a query should be executed—specifically, which tables and indexes to use, and which operations to perform on them to obtain the results; this is called a query plan. Some of the most important inputs into this decision-making process are statistics that describe the distribution of data values for columns within a table or index. Obviously, statistics need to be accurate and up-to-date to be useful to the Query Processor, otherwise poorly performing query plans may be chosen.

Statistics are generated by reading the table/index data and determining the data distribution for the relevant columns. Statistics can be built by scanning all the data values for a particular column (a full scan) but they can also be based on a user-specified percentage of the data (a sampled scan). If the distribution of values in a column is pretty even, then a sampled scan should be good enough, and this makes creating and updating the statistics faster than with a full scan.

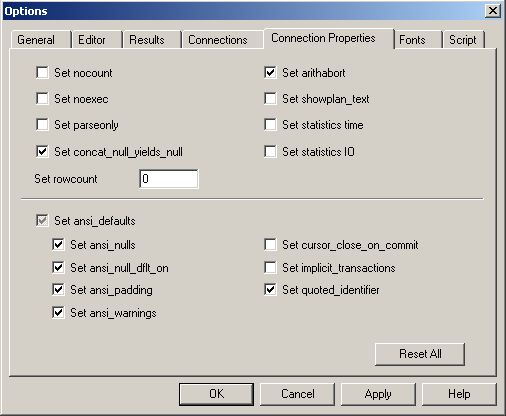

Note that statistics can be automatically created and maintained by turning on the AUTO_CREATE_STATISTICS and AUTO_UPDATE_STATISTICS database options, as shown in Figure 4. These are on by default but if you've just inherited a database, you may want to check to make sure. Sometimes statistics can become out-of-date, in which case manually updating them is possible using the UPDATE STATISTICS operation on specific sets of statistics. Alternatively, the sp_updatestats stored procedure can be used, which updates all statistics that are out of date (in SQL Server 2000, sp_updatestats updates all statistics, regardless of age).

Figure 4 Changing database settings through SQL Server Management Studio (Click the image for a larger view)

If you want to update statistics as part of your regular maintenance plan, there is a catch you should be aware of. Both UPDATE STATISTICS and sp_updatestats default to using the previously specified level of sampling (if any)—and this may be less than a full scan. Index rebuilds automatically update statistics with a full scan. If you manually update statistics after an index rebuild, it's possible to end up with less accurate statistics! This can happen if a sampled scan from the manual update overwrites the full scan generated by the index rebuild. On the other hand, reorganizing an index does not update statistics at all.

Again, many people have a maintenance plan that updates all statistics at some point before or after rebuilding all indexes—and so unknowingly they end up with potentially less accurate statistics. If you do choose to simply rebuild all indexes every so often, that will take care of statistics too. If you choose to go a more complex route with fragmentation removal, you should also do that for statistics maintenance. Here's what I suggest:

- Analyze indexes and determine which indexes to operate on and how to do the fragmentation removal.

- For all indexes that were not rebuilt, update the statistics.

- Update statistics for all of the non-indexed columns.

For more information on statistics, see the white paper "Statistics Used by the Query Optimizer in Microsoft

® SQL Server 2005" (

microsoft.com/technet/prodtechnol/sql/2005/qrystats.mspx).

Corruption Detection

I have discussed performance-related maintenance. Now I want to switch gears and discuss corruption detection and mitigation.

It's very unlikely that the database you're managing contains totally useless information that no one cares about—so how do you go about making sure that the data remains uncorrupted and recoverable in the event of a disaster? The ins-and-outs of putting together a full disaster-recovery and high-availability strategy are beyond the scope of this article, but there are a few simple things you can do to get started.

The overwhelming majority of corruptions are caused by "hardware." Why do I put it in quotes? Well, hardware here is really shorthand for "something in the I/O subsystem underneath SQL Server." The I/O subsystem consists of elements such as the operating system, file-system drivers, device-drivers, RAID controllers, cables, networks, and the actual disk drives themselves. That's a lot of places where problems can (and do) occur.

One of the most common problems is when a power failure occurs and a disk drive is in the middle of writing out a database page. If the drive cannot complete the write before it runs out of power (or write operations are cached and there isn't enough battery backup to flush the drive's cache) the result could be an incomplete page image on the disk. This can happen because an 8KB database page is actually comprised of 16 contiguous 512-byte disk sectors. An incomplete write could have written some of the sectors from the new page but leave some of the sectors from the previous page image. This situation is called a torn page. How can you detect when this happens?

SQL Server has a mechanism to detect this situation. It involves storing a couple of bits from every sector of the page and writing a specific pattern in their place (this happens just before the page is written to disk). If the pattern is not the same when the page is read back in, SQL Server knows the page was "torn" and raises an error.

In SQL Server 2005 and later, a more comprehensive mechanism called page checksums is available that can detect any corruption on a page. This involves writing a whole-page checksum on the page just before it is written out and then testing it when the page is read back in, just as for torn-page detection. After enabling page checksums, a page needs to be read into the buffer pool, changed in some way, and then written out to disk again before it is protected by a page checksum.

So, it is a best practice to have page checksums enabled for SQL Server 2005 onwards, with torn-page detection enabled for SQL Server 2000. To enable page checksums, use:

|

ALTER DATABASE MyDatabase SET PAGE_VERIFY CHECKSUM;

|

To enable torn-page detection for SQL Server 2000, use this:

|

ALTER DATABASE MyDatabase SET TORN_PAGE_DETECTION ON;

|

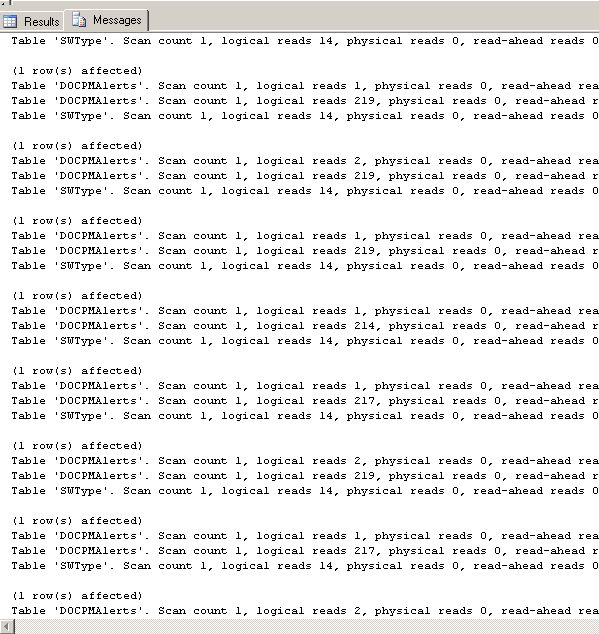

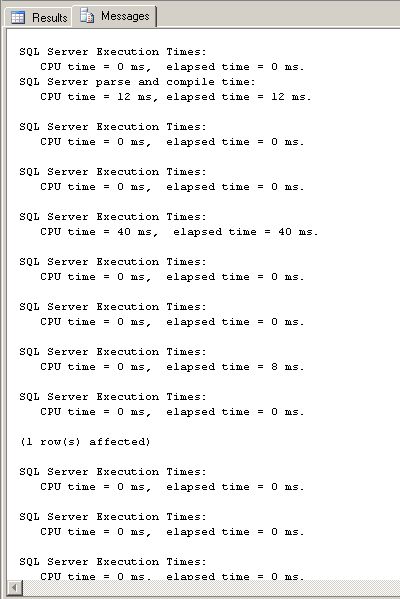

These mechanisms allow you to detect when a page has a corruption on it, but only when the page is read. How can you easily force all the allocated pages to be read? The best method for doing this (and finding any other kinds of corruption) is to use the DBCC CHECKDB command. Regardless of the options specified, this command will always read all pages in the database, thus causing any page checksums or torn-page detection to be verified. You should also set up alerts so you know when users encounter corruption problems when running queries. You can be notified of all the problems described above using an alert for Severity 24 errors (Figure 5).

Figure 5 Setting up an alert for all Severity 24 errors (Click the image for a larger view)

So another best practice is to regularly run DBCC CHECKDB on databases to verify their integrity. There are many variations on this command and questions around how often to run it. Unfortunately, there is no white paper available that discusses this. However, as DBCC CHECKDB was the main piece of code I wrote for SQL Server 2005, I have blogged extensively about it. See the "CHECKDB From Every Angle" category of my blog (

sqlskills.com/blogs/paul) for many in-depth articles on consistency checking, best practices, and how-to advice. For involuntary DBAs, the rule of thumb is to run a DBCC CHECKDB as often as you take a full database backup (more on this below). I recommend running the following command:

|

DBCC CHECKDB ('MyDatabase') WITH NO_INFOMSGS,

ALL_ERRORMSGS;

|

If there is any output from this command, DBCC has found some corruptions in the database. The question then becomes what to do if DBCC CHECKDB finds any corruptions. That's where backups come in.

Backups

When a corruption or other disaster occurs, the most effective way to recover is to restore the database from backups. Now, this assumes that you have backups in the first place, and that they're not corrupt themselves. All too often, people want to know how to get a badly corrupted database running again when they don't have a backup. The simple answer is that you can't, not without experiencing some form of data loss that could play havoc with your business logic and relational data integrity.

So there is a very strong case for taking regular backups. The intricacies of using backup and restore are well beyond the scope of this article, but let me give you a quick primer on how to establish a backup strategy.

First, you should take regular full-database backups. This gives you a single point-in-time to which you can later restore. You can take a full-database backup using the BACKUP DATABASE command. Look in Books Online for examples. For added protection, you can use the WITH CHECKSUM option, which verifies the page checksums (if present) of pages being read and calculates a checksum over the entire backup. You should choose a frequency that reflects how much data or work your business is comfortable losing. For example, taking a full database backup once per day means you may lose up to a day's worth of data in the event of a disaster. If you are only using full database backups, you should be in the SIMPLE recovery model (commonly called recovery mode) to avoid complexities relating to transaction log growth management.

Second, always keep the backups around for a few days in case one becomes corrupt—a backup from a few days ago is better than no backup at all. You should also verify the integrity of your backups using the RESTORE WITH VERIFYONLY command (again, see Books Online). If you used the WITH CHECKSUM option when the backup was created, running the verification command will check that the backup checksum is still valid, as well as re-check all the page checksums of pages within the backup.

Third, if a daily full database backup does not allow you to meet the maximum data/work loss your business can sustain, you may want to investigate differential database backups. A differential database backup is based on a full database backup and contains a record of all the changes since the last full database backup (a common misconception is that differential backups are incremental—they are not). A sample strategy might be to take a daily full database backup, with a differential database backup every four hours. A differential backup provides a single extra point-in-time recovery option. If you are only using full database and differential database backups, you should still be using the SIMPLE recovery model.

Finally, the ultimate in recoverability comes with using log backups. These are only available in the FULL (or BULK_LOGGED) recovery models and provide a backup of all the log records generated since the previous log backup. Maintaining a set of log backups with periodic full database (and maybe differential database) backups gives an unlimited number of points-in-time to recover to—including up-to-the-minute recovery. The trade-off is that the transaction log will continue to grow unless it is "freed" by taking a log backup. A sample strategy here would be a full database backup every day, a differential database backup every four hours, and a log backup every half hour.

Deciding on a backup strategy and setting it up can be complicated. At the very least, you should have a regular full-database backup to ensure you have at least one point-in-time to recover from.

Wrap-Up

As you can see, to ensure your database stays healthy and available there are a few "'must do" tasks. Here's my final checklist for an involuntary DBA taking over a database:

- Remove excessive transaction log file fragmentation.

- Set auto-growth correctly.

- Turn off any scheduled shrink operations.

- Turn on instant file initialization.

- Put a regular process in place to detect and remove index fragmentation.

- Turn on AUTO_CREATE_STATISTICS and AUTO_UPDATE_STATISTICS, plus have a regular process in place to update statistics.

- Turn on page checksums (or least torn-page detection on SQL Server 2000).

- Have a regular process to run DBCC CHECKDB.

- Have a regular process in place to take full database backups, plus differential and log backups for point-in-time recovery.

I've given T-SQL commands within the article, but you can do a lot from Management Studio too. Hopefully, I have given you some useful pointers for effective database maintenance.

Contents

Contents